In my personal opinion, the most dangerous and unpredictable challenge facing Bitcoin is not political pressure, practical usability, scaling, or economic incentive… It is the threat of quantum computers.

Large and powerful organizations - both political and corporate - have been pouring billions of dollars into developing quantum computers for decades. Ever since Peter Shor published his (in)famous namesake algorithm in 1994, cryptographers have been preparing for “Q-Day” - the day a large, practically usable quantum computer becomes available.

If you’re reading my blog, I can assume you are at least roughly familiar with elliptic curve public/private keys. I’ll simply tell you that a quantum computer of sufficient power would be able to flip an EC public key backwards into its private key - a feat thought to be implausible with ordinary classical computers. You can see how this might be a problem. If you’d like to learn how this can be done in detail, check out Shor’s original paper.

This article won’t dissect the mechanics of how quantum computers break Bitcoin public keys, nor is it intended as a doom-and-gloom FUD-piece about how we should all be digging graves, stocking bunkers, and prepping for day zero of the post-quantum meltdown. I won’t bother to conjecture how long it will take for a powerful quantum computer to be invented - Estimates of when this will occur vary widely even between professional analysts. I will simply assume it will happen, one day, eventually. When it does, we must deal with it.

My hope is that this article will show you the landscape of the quantum threats facing the Bitcoin community. Then I’ll explore various hash-based signature schemes and analyze their potential to diffuse these threats, and the tradeoffs which inevitably come with them. Finally, I’ll propose a novel upgrade path for Bitcoin clients which uses hash-based cryptography as a fallback option to insure bitcoin users against future quantum adversaries, without the need for any near-term consensus changes.

All safe roads once were wild.

The Quantum Threat

In this section I’ll dive into the mechanics of how exactly a selfish quantum adversary could attack Bitcoin for financial gain. Understanding the fundamental threat of quantum computers in more detail will lead us to clues as to how we can improve our defenses.

Almost every Bitcoin transaction mined from the genesis block to today has authenticated itself using one or more asymmetric signatures. Sometimes public keys are hashed. Sometimes we use Bitcoin script opcodes to encumber coins with additional validation steps (e.g. time delays). But eventually, to spend a Bitcoin UTXO safely, one must be required to supply an asymmetric signature matching some public key committed to by the UTXO itself. The signature must commit to the spending transaction in some way so that it can’t be applied to a different transaction.

If one were to encumber a transaction output with only a hashlock, or only a timelock, etc, with no asymmetric signature requirement, then that UTXO can be spent by anyone who observes it being spent (including the miners who must see the transaction to validate it).

1 | OP_SHA256 <hash> OP_EQUAL |

A simple hash-lock Bitcoin script pubkey. This script is unspendable until someone reveals the preimage of <hash>. After that, the script could be easily unlocked by anyone.

Bitcoin transactions use signatures on the secp256k1 elliptic curve to achieve this asymmetric authentication dynamic. ECDSA is the older and more well-known signing algorithm, and Schnorr is a cleaner, faster, more secure and more flexible signing algorithm which was adopted several years ago as part of Bitcoin’s Taproot upgrade. Bitcoin’s Schnorr and ECDSA signing protocols both use the same secp256k1 elliptic curve.

ECDSA and Schnorr signing protocols are equally vulnerable to a quantum adversary (QA) who knows a victim’s secp256k1 public key, because the QA can simply invert the public key into the private key, and sign any message they desire.

However, the same QA will have a much harder time computing the preimage (i.e. input) of a hash function given its output. The currently-known best-case-scenario for a QA brute-forcing a hash preimage is a quadratic speed boost, given by Grover’s algorithm. Quadratic in this context means that if a classical computer requires

So not all one-way functions are dead in the water, and cryptographic hashes aren’t the only ones still kicking.

Let’s examine the different address (output script) types in more detail.

Hashed Output Types

(AKA P2PKH/P2SH/P2WPKH/P2WSH)

The most commonly used type of Bitcoin address today is a pay-to-(witness)-public-key-hash address (P2PKH/P2WPKH), in which the public key is first hashed before being given to the payer. When spending this output, the owner signs the transaction and provides the raw public key so that verifiers (Bitcoin nodes) may re-hash it and compare the hash against the output script being spent.

1 | OP_DUP OP_HASH160 <hash> OP_EQUALVERIFY OP_CHECKSIG |

The classic P2PKH locking-script.

In P2SH and P2WSH, the address instead is the hash of a Bitcoin locking script which itself almost always contains at least one ECDSA pubkey, whose signature is required for spending. For the purposes of assessing post-quantum security, script-hash type outputs have effectively the same properties as key-hash type outputs, with the exception that P2WSH outputs are encoded as SHA256 hashes whereas all others are encoded as RMD160 hashes.

1 | OP_HASH160 <hash> OP_EQUAL |

The classic P2SH locking-script.

These four types of addresses currently protect the bulk of the mined and circulating Bitcoin supply.

The threat of a quantum adversary (QA) is in its ability to invert public keys into private keys. If a key-hash or script-hash address has received but not spent any of its coins, then the preimage of that hash (the key or the script) hasn’t been revealed yet. Barring out-of-band exposure (e.g. via BIP32 xpubs leaked to servers or peers), the QA generally won’t be able to attack such addresses practically.

This protection ends the moment the owner of the key/script-hash address tries to spend any of their coins. To validate a transaction spending a pay-to-*-hash output, the Bitcoin network peers must be given the key/script preimage. A quantum adversary could easily monitor these transactions and see that preimage, which always contains a public key the QA can invert. After inverting, the QA can try to double-spend the coins with a new higher-fee transaction.

Gracefully, the QA will have a limited time in which to mount this attack. A typical Bitcoin TX spends only 5 to 60 minutes of its public existence in the mempool before it is mined, at which point the coins will have moved permanently to a new address. If that new address is protected by a hash whose preimage the QA does not know, then the coins will be out of reach for the QA.

However, a significant fraction of key-hash and script-hash addresses are being reused on mainnet today. A reused address is one which receives one or more UTXOs, spends them, and having revealed their public key or spending-script, is still being used to custody additional Bitcoin UTXOs. Some estimate the amount of bitcoin held on such reused addresses was at least 5 million BTC as recently as 2019. A quantum adversary has much more time to compute the public keys exposed by these addresses. Time is on the side of the QA here; The burden of action is squarely on the shoulders of the owner to move their coins to a fresh unused address.

There is also the possibility of a large mining pool becoming a quantum adversary. Such a pool could delay mining certain transactions intentionally, to give themselves more time to crack a newly discovered public key.

Summary

P2PKH/P2SH/P2WPKH/P2WSH output script types can be reasonably secure against a QA if used properly, provided the QA needs a decent amount of processing time (more than an hour) to invert a secp256k1 public key.

The problem is that proper usage is hard. Ava Chow describes the numerous pitfalls which plague key-hashing as a countermeasure against a quantum adversary.

P2TR Outputs

In P2TR addresses, an internal public key

When spending, the bearer can either authenticate directly by signing as the output pubkey

The critical distinction between P2TR and the legacy pay-to-*-hash output script types is that taproot encodes a secp256k1 point directly in the output script. This means a quantum adversary (QA) can invert any P2TR output pubkey

This is not a new observation, there has been much discourse and controversy surrounding this property of the taproot upgrade.

- https://freicoin.substack.com/p/why-im-against-taproot

- https://lists.linuxfoundation.org/pipermail/bitcoin-dev/2021-March/018641.html

- https://bitcoinops.org/en/newsletters/2021/03/24/#discussion-of-quantum-computer-attacks-on-taproot

Summary

Against a quantum adversary, P2TR addresses are comparable in security to an already-reused key-hash address. The QA has unlimited time to invert the output pubkey and can start attacking any P2TR output from the moment they learn of its output public key

Note on Multisig

P2SH or P2WSH addresses which use OP_CHECKMULTISIG cause a linear increase in the processing time needed for a QA to fully compromise the address. For an

This slowdown will probably be offset by parallel-processing across multiple quantum processors, or with parallelized quantum algorithms which invert multiple public keys at once.

P2TR multisig addresses which use key aggregation do not have this property, because the taproot output pubkey

Note on BIP32

Most consumer wallets derive their addresses using BIP32 hierarchical-deterministic (HD) wallet schemes. This allows a single seed to derive basically unlimited secret keys and corresponding public addresses for any script type. Wallets will sometimes share their extended public keys to avoid the need to communicate many individual child addresses.

To a quantum adversary, a BIP32 extended public key (xpub) is equivalent to its counterpart extended private key (xpriv) given enough time to invert the xpub. Once inverted, the QA can quickly derive all child addresses, and can thereafter monitor the blockchain to steal any funds paid to them.

To comply with BIP44 standards, most wallets derive one xpub/xpriv pair at specific BIP32 paths for each Bitcoin address type.

m/44'/0'/n'for P2PKHm/49'/0'/n'for P2SH-wrapped P2WPKHm/84'/0'/n'for native P2WPKHm/86'/0'/n'for P2TR

Each layer of derivation in these key paths after the master key (m) is hardened, meaning that deriving that child key involves hashing the parent secret key.

Remember QAs cannot invert a hash function as efficiently as they invert elliptic curve public keys. This means that the BIP32 master key m, as well as the xprivs at any previously unused key path, are generally safe from the eyes of the QA provided the wallet has not exposed the BIP32 master public key. We will make use of this property in some of the options we discuss later.

Quantum-Safe Signatures

The critical question is: What cryptographic mechanism replaces elliptic curve digital signatures?

Whatever mechanism replaces EC signatures must be asymmetric, allowing a user to give payment instructions to a prospective sender, without surrendering control of their money to the sender. This way the receiver can claim that payment later without revealing their signing credentials to anybody (like one would with a password to a bank website). This rules out symmetric cryptographic schemes, such as HMAC, simple hashing, or symmetric encryption algorithms like AES or ChaCha.

We have numerous quantitative metrics by which to judge a replacement algorithm:

- Public key size

- Signature size

- Secret key size

- Key generation time

- Signature computation time

- Signature verification time

We also have qualitative statements we can make about a signing algorithm:

- Statefulness: Does a signer need to maintain state between each signature?

- Reusability: Can a signer reuse their secret key to sign multiple messages?

Today we’re going to explore one genre of signing algorithms which is almost certainly the safest and most conservative candidate, but which comes with some big practical issues: Hash-based signatures, or HBS for short.

Hash-based signature schemes are constructed from a simple one-way cryptographic hash function like SHA256. Secret keys are usually vectors of preimages. Public keys are usually hashes, or vectors of hashes. Signatures are usually vectors of preimages, paired with auxiliary data needed to verify the preimages correspond to a specific static public key. Unlike other candidates for post-quantum cryptography, hash-based signatures do not rely on new (possibly flawed) cryptographic assumptions - This is what makes them the conservative option.

I’ll now give a detailed description of several well-known hash-based signature algorithms, in increasing order of complexity. If I lose you in some of the math, don’t worry - Understanding the internals of the HBS schemes is not critical to understanding how they can be applied to Bitcoin.

Skip through to the final analysis section if you don’t care about the technical details of HBS schemes.

Before we begin, some notation:

| Notation | Meaning |

|---|---|

| The set of all possible bit strings of length |

|

| Uniform random sampling of an element |

Let

I began studying these hash-based signing protocols only a few weeks ago. Do not take my technical descriptions below as precise and safe for real-world use, because I have probably made typos or omitted stuff. The purpose of these descriptions is to illustrate and convey the essence of the algorithms, not to provide any concrete implementation instructions.

Roll your own crypto at your own peril.

If you are an expert in hash-based signatures and have noticed a typo, please email me or open a pull request to fix it.

Let’s start with the simplest and earliest-known example of an HBS scheme: Lamport Signatures.

Lamport Signatures

We want to sign a single bit of information - 0 or 1 - using our hash function

We generate two random preimages of

The tuple

Our public key is the tuple

We may generalize this approach to sign message strings of arbitrary bit length

Each

Our public key is made of hashes of every preimage.

To produce a signature

To verify, simply check that the hashed preimages match the public key for every bit

Properties

Lamport Signatures are a one-time signature (OTS) protocol - once the signer reveals a signature for a given public key, they can never use the same signing key again for a different message. If they do, the signer gives observers the ability to forge signatures on new messages they never consented to sign.

For instance, if

Signatures have bit length

Signing is simple, as it consists only of revealing secrets. Verification requires

Modifications

We can modify lamport signatures to improve the amount of space consumed by keys and signatures at the cost of some computation time.

Deterministic key-gen: Instead of randomly sampling our secret key preimages, we can derive them from a root secret

This way we only need to store a single 256-bit secret preimage for each Lamport OTS key, instead of

Compact public keys: We can reduce the size of public keys from

However, we must now adjust our signature algorithm to supply not only the

Signatures double in size to

Verifiers can verify

Comparisons

The following table shows some concrete examples of time/space tradeoffs for Lamport signatures. In these examples, I’ll set

| Algorithm | Signature size | Public key size | Secret key size |

|---|---|---|---|

| Vanilla Lamport | |||

| Compact Lamport |

Below I note the time complexity of Lamport signatures in terms of the number of invocations of the hash function

| Algorithm | Keygen time | Signing time | Verification time |

|---|---|---|---|

| Vanilla Lamport | |||

| Compact Lamport |

Viability for Bitcoin

I expect the Compact Lamport signature approach would be the most practical flavor of Lamport Signatures, as public keys would be the same size or possibly smaller than a P2TR pubkey is today.

However, even the most space-efficient Lamport signatures with the lowest viable security levels (

There’s also the matter of key reuse. Lamport signatures are a one-time signature scheme. If a Bitcoin user spends from a hypothetical “pay-to-lamport-public-key” address, they’ll never be able to spend from that address again without giving observers some power to forge signatures.

Power users would not be affected greatly, as the best-practice touted by most experienced Bitcoiners is to use addresses only once, for a single receive/spend cycle. Yet, as we’ve seen earlier, a huge fraction of bitcoiners by volume have either never heard this advice, or have disregarded it. It will be difficult to convey to these users that address reuse with Lamport signatures will be more than just a privacy no-no - It could seriously compromise their security. This would make life especially difficult for cold-storage hardware signing devices, which rely on a semi-trusted host machine to prompt them with transactions to sign.

Lamport signatures are stateful, in that a signer should know if he has used his private key to sign a message before to know whether signing again could be dangerous.

Lamport signatures are not backwards compatible with Bitcoin’s consensus protocol as it exists today, although an outlandish scheme has been proposed by Ethan Heilman which make use of OP_SIZE to Lamport-sign the size of an ECDSA signature. Whether this approach is secure is still up for debate. But even with such creative invention, the size of such a signature would prohibit its usage in P2WSH addresses. At least with current knowledge, a soft-fork would be needed to add Lamport signature support.

Even after such a soft fork, existing addresses with exposed public keys would still be vulnerable to a quantum adversary. Users would need to actively migrate coins to a hypothetical pay-to-lamport-public-key address format.

Winternitz One-Time Signatures (WOTS)

Winternitz signatures rely on a chain of hashes starting from a set of secret preimages. By revealing certain intermediate hashes in that chain, the signer can sign a specific message.

In comparison to the Lamport scheme, WOTS shoulders a higher computational burden in exchange for much shorter signatures. Let’s see how they work.

First, let’s re-establish some parameters:

- Let

be the bit-length of the message we want to sign. - Let

be the “Winternitz parameter” (used for time/space tradeoffs). - Let

be the bit-group width into which we parse the message. - Let

be the length of the message in digits of base . - Let

be the checksum length in digits of base . - Let

be the overall length of the message plus the checksum in digits of base .

As WOTS relies on hash-chains, we need a hash-chaining function

So for instance,

Now we’re ready to generate our keys. We start by sampling

To compute the Winternitz public key

To sign a message

We compute a checksum

Because

We append this checksum array

To sign the checksummed-message

A verifier given the plain message

If this holds for all

Properties

Security. Note the special role played by the checksum. Without it, revealing one set of intermediate hashes should allow an observer to forge signatures on any message in a “higher” position in the hash chain.

For instance, consider the following diagramed example.

If we don’t add a checksum to the two-digit message

Because of the checksum construction, increasing any message digit

Size. Signatures, public keys, and secret keys all have bit length

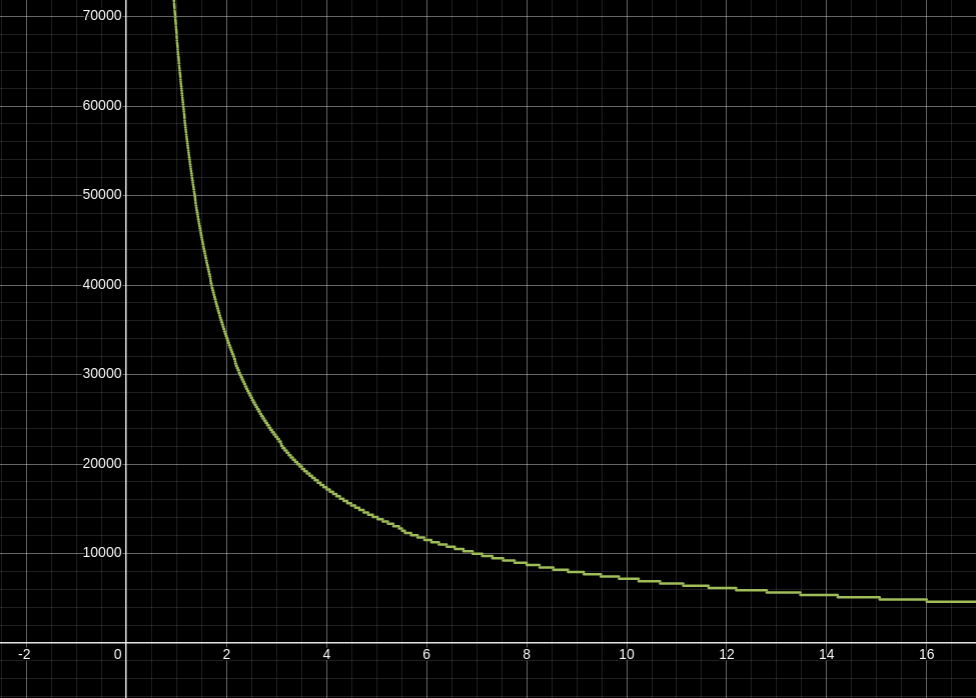

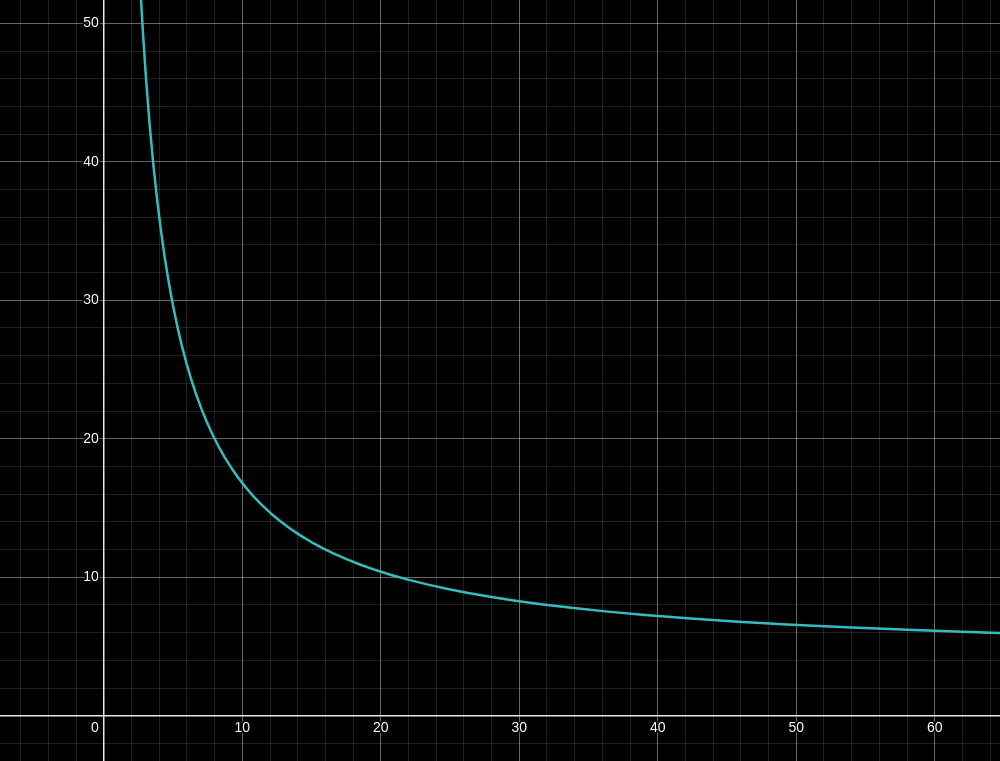

In case that equation seems unintuitive to you, you’re not alone. I plotted the relationship between the time/space tradeoff parameter

Higher values of

Approaching anywhere near that limit would be wildly impractical though, because by increasing

Runtime. Given a secret key

Public key generation uses the same hash-chaining procedure as signing/verifying. To sign or verify a signature, we must compute at worst

To avoid this exponential blowup in computation time, most real-world WOTS instantiations keep

X-axis is

For reference, my weedy little computer can generate a WOTS public key with

Curiously, due to the zero-sum nature of the hash-chain signature construction, signers and verifiers who are able to select the message they’re signing can also influence the amount of work they and their counterparty must perform. By selecting a message digit

Modifications

Compact public keys: WOTS lends itself well to compacting public keys via hashing. A Winternitz signature gives the verifier everything they need to reconstruct the signer’s public key

This decreases the public key size from

Deterministic key-gen: Like with Lamport signatures, WOTS secret keys can be generated from a seed with only

Smaller and more secure signatures: To improve performance and security, one should consider using WOTS+, a variant of WOTS which adjusts the hash-chain construction to XOR each of the intermediary hashes in every hash chain with a randomization vector. This odd choice allows the security proof to reduce forgeries to second preimage extraction: If someone can forge a WOTS+ signature, they can also break the second-preimage resistance of

This means we can get away with using WOTS+ with smaller, faster hash functions which have broken collision resistance but are still believed to be second-preimage resistant, as long as one can accept the lower security level implied by their shorter output. Or WOTS+ can be instantiated with larger hash functions, and for only a modest increase in signature size and verification complexity, we gain the added security of weaker assumptions.

WOTS+ relies on a set of

Instead of computing the intermediate values of the hash-chains as

For instance,

WOTS+ appends the tuple

The compact WOTS+ public key

More efficient signatures using brute-force compression: This paper suggests modifying the WOTS signing algorithm so that the signer repeatedly hashes the input message

This allows the signer to omit the checksum portion of the WOTS signature, because the checksum is verified to always work out to a fixed static value. Messages without this fixed checksum are not valid, and so the checksum itself does not need to be signed. This approach is called “WOTS with Compression” (WOTS+C).

The effect of this compression is to reduce the overall runtime of the scheme on average across keygen, verification, and signing, while also reducing signature size by removing the

The authors of WOTS+C even propose ways for the signer to further reduce the size of their signature by removing additional chains from their signature, at the cost of additional work to find an appropriate message salt.

Comparisons

The following table shows some concrete examples of time/space tradeoffs for various kinds of Winternitz signatures. In these examples, I’ll set

| Algorithm | Signature size | Public key size | Secret key size |

|---|---|---|---|

| Vanilla WOTS |

|||

| Compact WOTS |

|||

| Compact WOTS |

|||

Compact WOTS |

|||

| Compact WOTS |

|||

| … | |||

| Vanilla WOTS+ |

|||

| Compact WOTS+ |

|||

| Compact WOTS+ |

|||

Compact WOTS+ |

|||

| Compact WOTS+ |

|||

| … | |||

| Compact WOTS+C |

|||

| Compact WOTS+C |

|||

Compact WOTS+C |

|||

| Compact WOTS+C |

Below I note the worst-case time complexity of each flavor of Winternitz signatures in terms of the number of invocations of the hash function

For the sake of brevity, let

| Algorithm | Keygen time | Signing time | Verification time |

|---|---|---|---|

| Vanilla WOTS | |||

| Compact WOTS | |||

| Vanilla WOTS+ | |||

| Compact WOTS+ | |||

| Compact WOTS+C |

The additional

*

Viability for Bitcoin

Winternitz signatures are significantly smaller than Lamport signatures. With compaction and deterministic key-generation, the public and private keys can be condensed down to the same constant size (

Compact WOTS+ with

However, like Lamport signatures, WOTS is a stateful one-time signature scheme, so it inherits all the same security concerns as Lamport signatures regarding address reuse ergonomics.

Hash to Obtain Random Subsets (HORS)

HORS is a few-time signature protocol based on Lamport Signatures which relies on complexity theory to make signatures smaller and provably resilient to forgery.

To generate a HORS key, the signer samples some large number

To sign a specific message, the signer reveals a specific subset of

These parameters also affect the security of the HORS scheme. With

The key ingredient of HORS is a subset selection algorithm

The HORS paper suggests two bijective functions which fulfill the subset-selection role of

However these bijective functions are not one-way functions, so if a signer provides two or more signatures from the same key it is trivial for an observer to forge new signatures by working backwards to determine which messages they can now sign using the signer’s revealed preimages.

To address this, HORS instead uses a one-way hashing function

Because the output of

After computing

A HORS verifier with a public key can then verify the message by recomputing

Properties

Unlike previous signature schemes, using a HORS signing key to sign more than one message does not immediately compromise the key’s security, it merely reduces security. An attacker who sees two or more signatures from the same secret key will need to brute-force the hash function

The signer still has to be cautious though, because as the attacker sees more signatures, their guess-and-check attack will get progressively easier. The HORS paper describes exactly how much easier. The following expression gives the bits of security for a HORS public key after an attacker has seen

Usually

Size. HORS signatures have bit size

If we fix

X axis is the signature size

Runtime. The HORS keygen procedure requires

Modifications

Deterministic key-gen: As with previous examples, we can derive the HORS secret key preimages from a single

Compact public keys: A modified version of HORS called “HORS with Trees” (HORST) was proposed by the authors of the SPHINCS signature scheme. Instead of representing the public key plainly as

Comparisons

The following table shows some concrete examples of time/space trade-offs for HORS signatures. In these examples, I’ll aim for a security level of approximately

| Algorithm | Signature size | Public key size | Secret key size |

|---|---|---|---|

| Vanilla HORS |

|||

| Vanilla HORS |

|||

| Vanilla HORS |

|||

| Vanilla HORS |

|||

| Vanilla HORS |

|||

| Vanilla HORS |

|||

| Vanilla HORS |

|||

| Compact HORST |

|||

| Compact HORST |

|||

| Compact HORST |

|||

| Compact HORST |

|||

| Compact HORST |

|||

| Compact HORST |

|||

| Compact HORST |

Below I note the time complexity of HORS signatures in terms of the number of invocations of the hash function

| Algorithm | Keygen time | Signing time | Verification time |

|---|---|---|---|

| Vanilla HORS | |||

| Compact HORST |

The exact amount of bits by which the HORST signature size grows (and thus verification time as well) is determined by the function

Viability for Bitcoin

HORS is conceptually refreshing, because it demonstrates that hash-based signatures can be quantifiably secure even when secret keys are reused. Since Bitcoin signing keys are typically only used a few dozen times at most, a few-time signature scheme like HORS might be acceptable for the vast majority of Bitcoin use cases, especially if each signature decreases security by a predictable and incremental amount, rather than the all-or-nothing security risks of reusing an OTS secret key (like with WOTS or Lamport).

However Vanilla HORS compromises significantly on public key sizes, while the more compact HORST experiences huge increases in signature key sizes as a trade-off. By comparison, Compact WOTS provided public keys of the same size with signatures roughly a quarter of the signature size HORST has. Safely supporting additional signatures (

HORS is not a stateful signature scheme, but the signer should know roughly how many times they have used a given key pair so that they can avoid over-exposing their key. On the coattails of this assumption ride the same security issues as WOTS and Lamport signatures regarding address reuse in a Bitcoin context. The problem with HORST is really that to make a key secure for reasonably large numbers of signatures, we must make our parameters very large which inflates either the keygen/signing runtime, or the signature size.

Forest of Random Subsets (FORS)

FORS is another few-time signature scheme based on HORST (discussed above). It was first introduced as a component within the SPHINCS+ paper in 2017, but it deserves a definition on its own as it improves on HORST to reduce signature sizes dramatically.

The primary distinction between HORST and FORS is that FORS public keys are represented as the hash of several merkle trees - hence the use of the term “forest”. Each leaf node of these trees is, like HORST, the hash of a secret preimage, and we sign data by revealing specific preimages along with their merkle tree membership proofs.

Let

This construction gives us

To sign a message

FORS is designed to generate the secret preimages from a small seed, giving constant secret key size. Given a secret FORS seed

Note especially that the subset selection algorithm

Properties

Security. Like its predecessors, FORS is a few-time scheme which means the signer can confidently quantify the security of their public key even after revealing some signatures. The bit security of a FORS public key against a signature forging attack (with attacker-chosen messages) after revealing

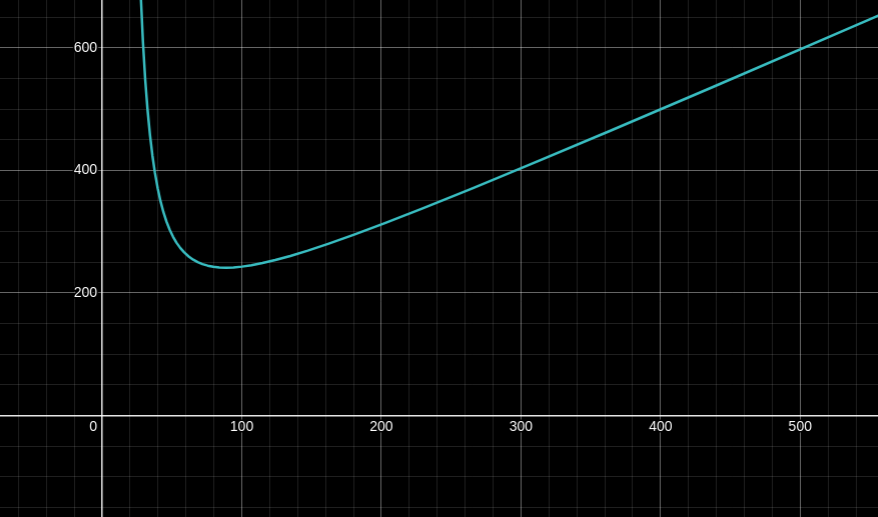

By fixing a security level of

X axis is

For instance, with

Size. Public and secret FORS keys are both a constant size of

A FORS signature consists of

Runtime. Key generation requires deriving

Signing requires a call to

If on the other hand the signer starts from just the seed (pre-keygen), then to construct a merkle proof for a single tree, they must first derive the

The verifier’s runtime is much smaller. The verifier must run

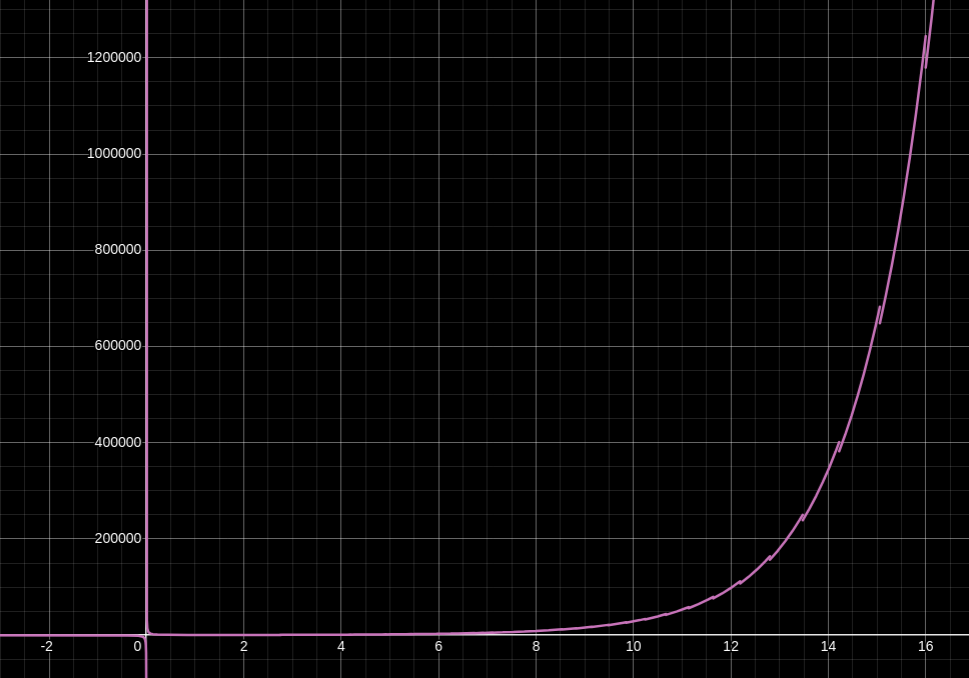

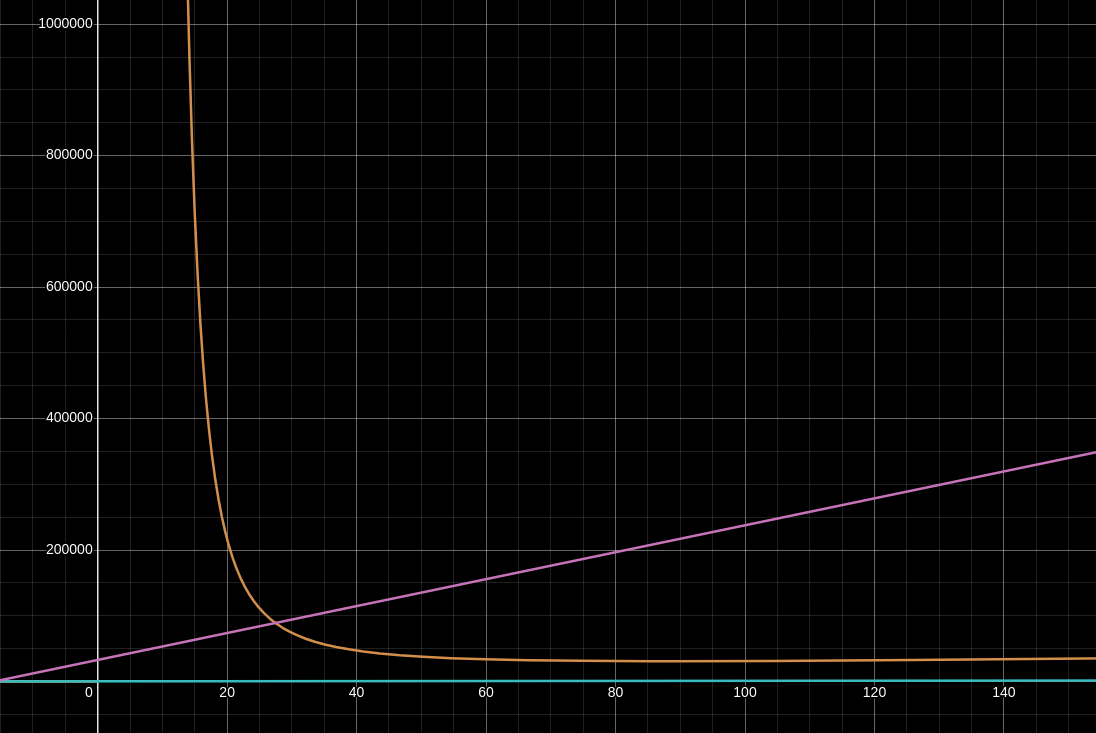

Check out the following graph which compares the time/space performance of FORS signatures. For this visualization, I fixed the FORS security parameters

Along the X axis is an input

- The orange curve is

, representing the total number of preimages the signer must generate and hash into their public key. This curve is directly proportional to both key-generation runtime and signer runtime (or memory usage if caching is used), but it does not affect public/secret key size. - The pink line is the signature bit-size

, which grows linearly with . - The blue line hugging the X axis is the verifier’s runtime

. It grows linearly with , but for all reasonable choices of the verifier needs to evaluate at most a thousand hashes or so.

This suggests that for the

Playing around with the parameters a little, we can see that using fewer but larger trees (lower

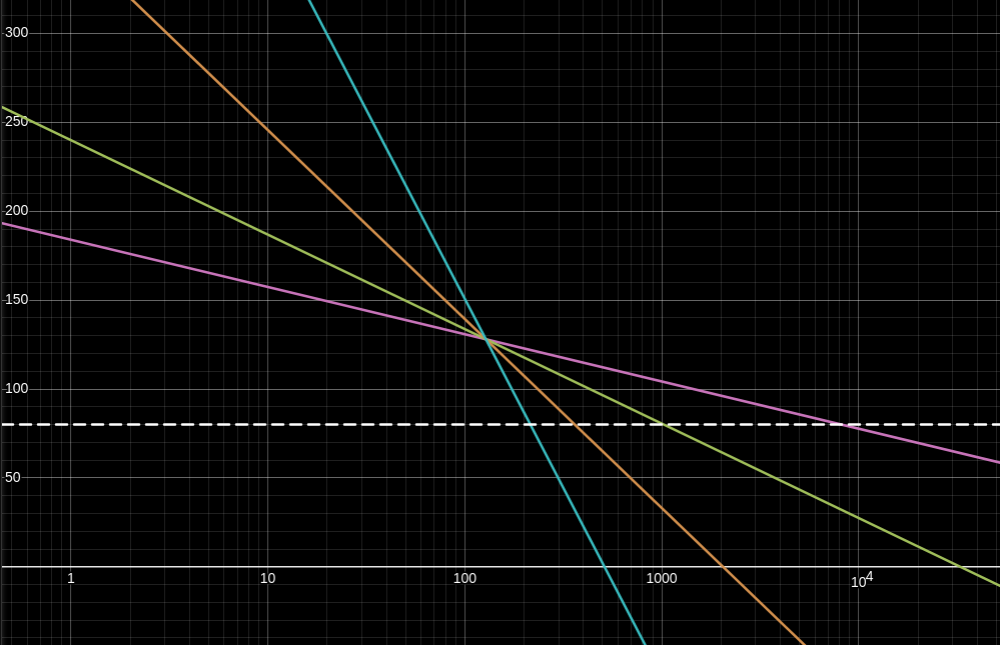

See the following visualization with

On the Y axis is the expected security level

- The pink line is FORS with

, . - The green line is FORS with

, . - The orange line is FORS with

, . - The blue line is FORS with

, . - The white dashed line is

bits of security, which represents an approximate boundary below which forgery might be practically achievable for currently available hardware.

Note how the

Modifications

Security against adaptive chosen-message attacks: The authors of this paper suggest modifying the FORS signing algorithm to make the subset-selection function

More efficient signatures using brute-force compression: This paper suggests modifying the FORS signing algorithm so that the signer repeatedly hashes the input message

Because

The effect of this modification is to reduce signatures size by approximately

The authors have also noted that if the signer wishes to further reduce signature size, they can perform additional work to reduce the size of the 2nd-to-last tree by forcing more trailing bits to hash to zero.

Intuitively, this compression mechanism has a larger impact when the trees are larger (when

Smaller signatures using Winternitz chains: This paper suggests extending the leaves of each FORS tree with a chain of hashes, much like the Winternitz OTS scheme. This extra dimension gives a new parameter, the length of the Winternitz chains

More possible signatures for the same size message means in theory that signatures should be more secure. This modified scheme is called “Forest of Random Chains” (FORC). One could think of standard FORS as a FORC instance with chains of length

The authors demonstrate that this extension improves reduces probability of two signatures colliding within a tree from

Unfortunately the FORC authors did not provide an explicit formula for the bit-security of FORC after

Click here to see my derivation

Recall the FORS security level of

Convert this to the probability that

With FORC, the inner per-tree probability

Converting back from a probability into bits of security:

Use the logarithm identities

Thus, the bits of security we receive by extending the leaves of a FORS tree with Winternitz chains of length

After working it out, FORC’s security level is

This improves on standard FORS by exactly

For a given set of security parameters

The savings diminish quickly as

In exchange for these security/size savings, FORC signers must process the hash chains for each of the FORS leaves. Keygen and signing runtime is multiplied by a factor of

Comparisons

The following table shows some concrete examples of time/space trade-offs for FORS signatures. In these examples, we will fix a security level of

| Algorithm | Signature size | Public key size | Secret key size |

|---|---|---|---|

| FORS |

|||

| FORS |

|||

| FORS |

|||

| FORS |

|||

| … | |||

| FORS+C |

|||

| FORS+C |

|||

| FORS+C |

|||

| FORS+C |

|||

| … | |||

| FORS |

|||

| FORS |

|||

| FORS |

|||

| FORS |

|||

| … | |||

| FORS+C |

|||

| FORS+C |

|||

| FORS+C |

|||

| FORS+C |

|||

| … | |||

| FORS |

|||

| FORS |

|||

| FORS |

|||

| FORS |

|||

| … | |||

| FORS+C |

|||

| FORS+C |

|||

| FORS+C |

|||

| FORS+C |

I have omitted concrete sizes for the FORC variant of FORS, as they would not be fairly comparable. We would need to use a slightly higher or slightly lower security bit-level

Below I note the time complexity of FORS signatures in terms of the number of invocations of the hash function

| Algorithm | Keygen time | Signing time (worst case) | Verification time |

|---|---|---|---|

| FORS | |||

| FORS+C | |||

| FORC |

Viability for Bitcoin

The FORS protocol allows for much more robust keys than HORST. The same size signatures provide much stronger few-time security, permitting more signatures per key before exhaustion. FORS is also a well-studied protocol, with many variants and higher-level protocols based on it.

This few-time scheme might be a possible candidate for usage on Bitcoin, if but for the large signature sizes. Even the smallest FORS+C signature with

Combining the principles of FORS+C and FORC could result in smaller signatures and merits further investigation, but this seems unlikely to shave more than beyond a few thousand bits off of each signature.

Merkle Signature Scheme (MSS)

MSS is our first example of a “many-time” signature (MTS) scheme, in contrast to the one-time or few-time schemes we’ve seen until now. It was first proposed by Ralph Merkle in 1979 (the same man after whom the “merkle tree” data structure itself is named).

The remarkably simple premise of MSS is this: By unifying many one-time signature (OTS) public keys as the leaves of a merkle tree, we can use each OTS key only once, but succinctly prove any OTS key’s membership in the merkle tree’s root hash. Our master public key is then defined to be the root hash of this merkle tree. If

The signer first samples

There are many flavors of MSS, the most well-known of which being the Extended Merkle Signature Scheme, or XMSS, which instantiates MSS using Winternitz as its one-time signature scheme. The next candidate protocol, SPHINCS, may also be argued to be an instantiation of MSS, though SPHINCS is so radically evolved from Merkle’s original scheme that it might be best to group it into a completely separate class of its own.

Due to the promising candidate which follows, I will omit any specific long-winded protocol explanations here. If you would like a detailed example of an MSS protocol, I highly recommend reading the XMSS paper.

Viability for Bitcoin

Although MSS schemes give us our first tantalizing glimpse at truly scalable hash-based signatures, they still suffer from the effects of statefulness. To safely use any Merkle Signature Scheme, the signer must always remember which leaf OTS keys they have or have not used - usually implemented with a simple counter. If they lose this state, the signer cannot safely continue using the key. If the signer were to issue more than one MSS signature with the same state, it could compromise the leaf OTS key, which in turn compromises the whole key.

However, the principle of MSS is incredibly flexible, which is why so many modern hash-based signature protocols are based on the core ideas of MSS, including the next one.

SPINCS+

SPHINCS+ is a state-of-the-art hash-based signature system which leverages concepts from the previous signature schemes I’ve described earlier. SPHINCS+ is currently the only hash-based signature (HBS) protocol to have been finalized by NIST as an approved post-quantum digital signature standard. In NIST’s terminology, SPHINCS is referred to as “SLH-DSA”, or “StateLess Hash-based Digital Signature Algorithm”. You see, SPHINCS is also special because unlike most many-time signature (MTS) schemes which use hashes, SPHINCS does not require signers to keep state.

SPHINCS+ key-generation starts from three secret random values:

The signer constructs their public key by building an MSS tree deterministically from

If one were to use these WOTS+ keys to simply sign messages directly, then this is just a particular flavor of MSS, but SPHINCS takes things further.

Instead of signing messages, each of the WOTS+ leaf keys are used to certify a child MSS tree, each of whose leaves are also WOTS+ public keys, and so on, eventually terminating after

The advantage of this approach, rather than a simple MSS tree, is that to sign a message with the bottom-level leaves of the hyper-tree, the signer does not need to re-derive the entire hyper-tree - they only need to derive the specific certified trees along the path from leaf to root. All other trees can be ignored.

The final

The entire SPHINCS+ signature is a package, consisting of:

- a FORS signature on the actual message

WOTS+ certification signatures merkle tree membership proofs - one for each WOTS+ certification pubkey - a randomizer

(explained below)

See the following diagrammed example with

The components of the SPHINCS signature (plus the message) allow a verifier to reconstruct the root node of the hypertree, which is then compared to the root tree node hash in the SPHINCS public key,

why mix two signing algorithms? why not use only FORS?

SPHINCS uses WOTS+ to certify intermediate trees (and FORS keys), because WOTS+ provides very small signatures as far as HBS schemes go. Depending on the number of layers in the hypertree, we may need several WOTS+ certification signatures, so keeping them small is very beneficial. The fact that WOTS+ is a one-time signature scheme is not a problem, because the trees and keys we use WOTS+ to certify are generated deterministically from

SPHINCS is entirely deterministic once the initial key seed values have been generated. This includes the selection of which FORS key to sign with. When we are given a message

The randomizer

Properties

SPHINCS sounds like it should be a stateful signature scheme - After all the signature on the actual message is issued with a few-time signature protocol, FORS, so ought not signers to know roughly how many times to use each FTS key pair?

But actually SPHINCS is advertised as a stateless signing protocol. You see, if they increase the overall height

We can afford to increase the height of the hypertree this large because of the key-certification trick which saves us from re-deriving all leaf FTS. Even with heavy use, most of the possible FTS keys are never even derived, let alone used. But the fact that they could be used allows for this probabilistic statelessness property.

The modern SPHINCS+ variant of SPHINCS specifically has very small public and secret keys. Pubkeys are only

However SPHINCS signatures are very large. Exact sizes depend on a large number of parameters:

- Security parameter

(hash output size) - SPHINCS parameters

and - WOTS+ parameters

and - FORS parameters

and

The total SPHINCS+ signature size is given by:

Reference (see page 36).

For different parameter sets, this can result in signatures varying in size from 8 kilobytes with the lowest security parameters up to 49 kilobytes for the highest security. See page 43 of the FIPS standard for concrete parameter sets recommended by NIST and their resulting signature sizes.

Viability for Bitcoin

SPHINCS is extremely powerful, and has numerous advantages. Stateless signing would make it a drop-in replacement for existing signature algorithms like Schnorr, so signers would not need to think about exhausting a key or tracking signature counters. The fact that SPHINCS+ is a fully standardized, open source and NIST-recommended signing algorithm would lend more credibility to arguments for adoption.

In the recommended parameter sets, NIST sets

Not even the most heavily used public keys in Bitcoin’s history have signed so many transactions… Except perhaps for the context of the Lightning network, where 2-of-2 multisignature contract participants sign sometimes hundreds of commitment transactions per minute - Though the Bitcoin blockchain rarely ever sees these transactions publicly (perhaps this fact might be useful).

However, SPHINCS’s downfall is its huge signature size. Even the smallest SPHINCS signatures with 128 bit security are around double the size of a FORS signature, and over 100x the size of BIP340 Schnorr.

Analysis

Some of the more modern hash-based signature schemes described above fulfill the hard requirements of Bitcoin:

- Small public and secret keys

- Fast signature verification time

Namely those schemes are:

- WOTS / WOTS+ / WOTS+C (OTS)

- FORS / FORS+C (FTS)

- SPHINCS+ (MTS)

The primary trade-off points between these schemes are:

- Signing and key generation runtime performance

- Signature size

- Number of signatures a signer may safely publish before forgeries become feasible

Here is a brief example to demonstrate scaling problems with hash-based signatures. If every transaction in a Bitcoin block were small-as-possible 1-input-1-output transactions (about 320 weight units before witness data), and each of those transactions used a single signature as their input’s witness, then Bitcoin’s block size limit of 4,000,000 weight units would be exhausted by:

- 490 transactions if they each used a SPHINCS+128 signature of 7856 witness bytes, or

- 875 transactions if they each used a FORS+C signature of 4256 witness bytes, or

- 2976 transactions if they each used a WOTS+C signature of 1028 witness bytes, or

- 10000+ transactions if they each used a BIP340 signature of 64 witness bytes

As of writing, blocks mined today are generally filled with some 3000-5000 transactions each, many of which spend large numbers of inputs.

It seems clear that using hash-based signatures of any kind would necessitate either a significant decrease in overall network throughput, or a significant increase in block size. The increase in block size could be pruned though, as large hash-based signatures could be attached as part of the segregated block witness and not the raw blocks themselves.

Upgrading Bitcoin

None of the above schemes matter unless it can be applied to build a real quantum-resistant upgrade to Bitcoin. In this section, I’ll propose one potential option for upgrading Bitcoin to use post-quantum hash-based cryptography while maintaining compatibility with existing elliptic-curve crypto (ECC) for as long as possible (to minimize performance loss over time).

I am not necessarily advocating to upgrade Bitcoin to use HBS in this particular way; I am merely trying to demonstrate what one possible realistic quantum resistance upgrade to Bitcoin might look like, knowing what I know today. There are far smarter people than me also working on this problem, who will probably have much to say about the methods I describe here.

UPDATE 2024-12-16

Speaking of smarter people than I, Matt Corallo has kindly done us all the favor of making an even better design for a post-quantum upgrade than my idea below (DASK). I would prefer his approach, but with a more succinct and simple hash-based signature scheme like WOTS or FORS. That said, I’ve left my original proposal below untouched outside this little update blurb.

Digests as Secret Keys (DASK)

Digests as Secret Keys (DASK) is my invented name (feat. a pronounceable acronym) for a hypothetical quantum-resistant upgrade path for Bitcoin. As far as I know, nobody else has proposed this idea before, but I would love to be proven wrong. Whether this idea is feasible or not, I would hope at least that I’m not alone.

DASK does not require any immediate consensus changes, but instead encourages a client-side specification change, modifying the way Bitcoin wallets derive their EC secret keys. A consensus change would be required later to retroactively change spending rules, and users would need to migrate their coins to a DASK-supported wallet before that fork, but the on-chain output scripts to which coins are paid would not change, so DASK would not impact Bitcoin’s fungibility, scalability, or consensus rules in the way a brand new output script format would.

Until the DASK consensus change is activated, Bitcoin transactions using EC signatures would look exactly the same as they do today. If large quantum computers never materialize for some reason, DASK need never be activated.

Background

A Bitcoin wallet is typically derived from a secret seed encoded as a mnemonic phrase. The wallet software uses the BIP32 and BIP44 standards to derive child secret/public key pairs from this seed, using a system of hashing and additive tweaking.

I am oversimplifying here, but basically the wallet starts with a secret key

* This describes hardened BIP32 derivation. Unhardened derivation hashes the public key instead of the secret key.

BIP32 encourages wallets to do this recursively, generating a large tree of practically unlimited size and depth, so that clients will never run out of available fresh signing keys. Once a suitable child secret key is derived, the wallet will typically use the EC secret key

DASK Description

The idea of Digests as Secret Keys is to derive a hash-based signature algorithm pubkey (or a hash thereof) and use that in place of the elliptic curve secret key

For example, let’s say we compute

A simplified diagram of the DASK concept. Further layers of derivation after the BIP39 seed

why?

This approach gives us a fallback option to authenticate a user in the event a quantum adversary appears who can invert our EC public key

In a post-quantum world, the Bitcoin network can activate a consensus change which enforces new hash-based spending rules on all addresses: disable ECDSA/Schnorr, and instead require a signature from the HBS public key

Since FORS, WOTS, and other HBS verification algorithms typically involve recomputing a specific hash digest and comparing that to the HBS public key, a verifying Bitcoin node could recompute the EC secret key

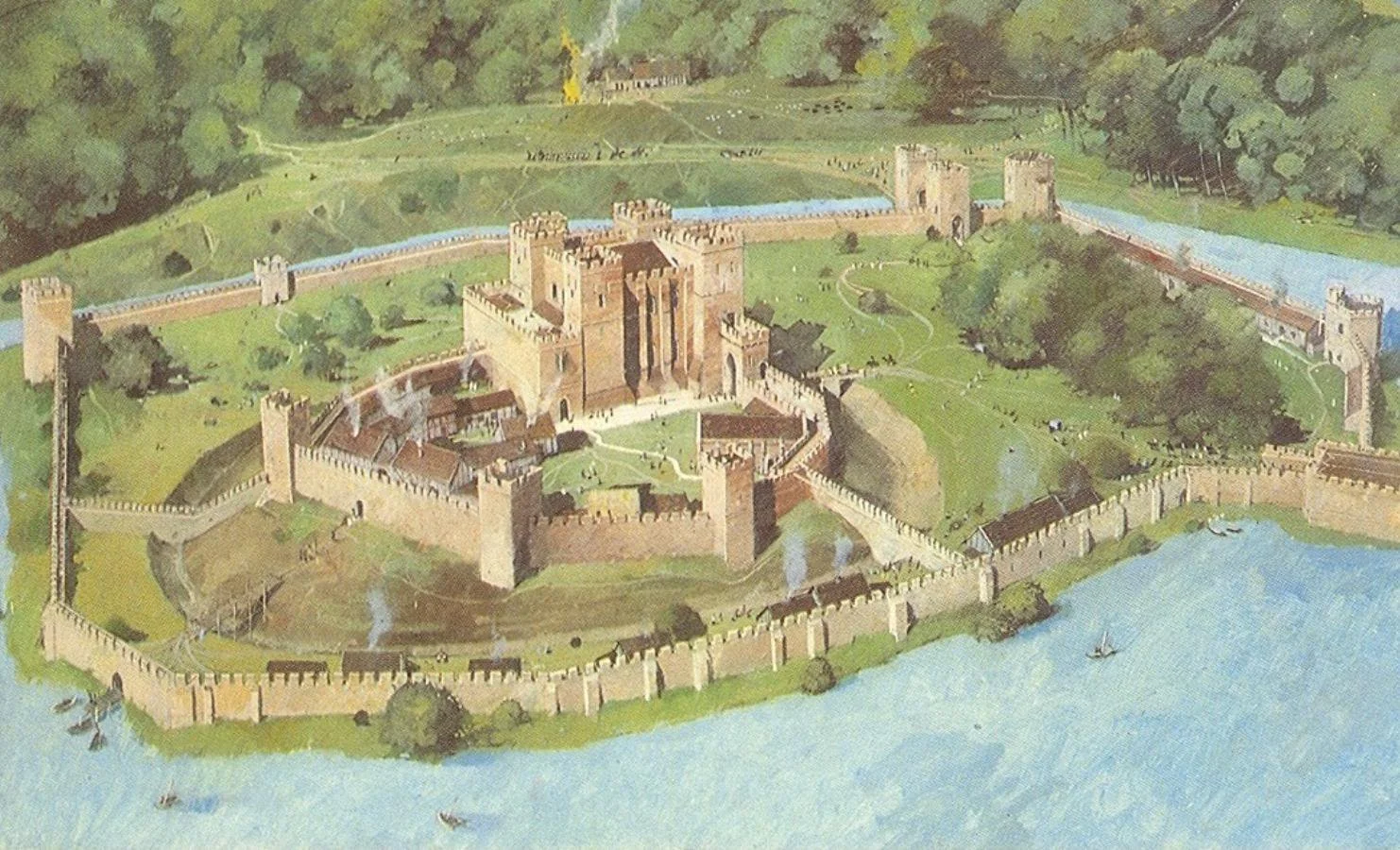

Think of the outer EC pubkey

Kenilworth Castle Reconstruction. Source.

The exception to the above is taproot, where an output public key

There are various ways we could handle this, the most obvious being to simply append the tapscript MAST root

Until Q-Day, Bitcoin users would be free to continue signing with ECDSA and Schnorr exactly as they do today. After Q-Day, the fallback HBS mechanism would allow a straightforward and secure way for users to retain control over their money.

Benefits

The main benefit of DASK is that the Bitcoin network does not need to implement any consensus changes today. Instead a client-side spec for DASK would be needed, and then users can opt into DASK-supported wallets at their leisure, without meaningful changes to user experience. Bitcoin wallet clients could even encourage this migration passively, by paying user change outputs to DASK-supported addresses instead of to pre-quantum BIP32 addresses, and by generating new receive addresses using DASK instead of BIP32. Wallets would be free to migrate coins well ahead of DASK activation. If the DASK consensus changes are never activated, users would not be in any worse position than they were before migrating to DASK.

DASK could also be applied to other post-quantum algorithms besides hash-based cryptography. For instance, an EC secret key

For even better flexibility, the network might treat the hash-based pubkey

After all, it seems probable that we will have access to more efficient post-quantum signing algorithms on Q-Day than we do today, and WOTS signatures are a relatively small, secure, future proof, and simple way to endorse some undefined future pubkey we don’t know how to derive yet. This certification idea mirrors the SPHINCS framework’s approach of using WOTS keys to endorse child keys without needing to know (rederive) the child keys, so there is at least some well-documented research on this use case of HBS.

Drawbacks

Consensus Complexity. Some ingenuity and compromises might be needed to activate DASK as a soft fork rather than as a hard fork. It seems like transactions mined after DASK which include hash-based signatures would be rejected by older Bitcoin nodes, which expect only an EC signature to claim the same output. I’m not sure whether this is even possible and would love to hear suggestions as to what a DASK soft fork might look like.

Performance. A DASK-supported EC key

More investigation and empirical benchmarking would be needed to make hard performance claims here. It seems to me at least that any reasonably small trade-off in key-derivation performance would be a price worth paying for quantum resistance.

HD wallets. There is one notable and certain drawback to DASK though: Without unhardened BIP32, extended public keys (xpubs) will no longer be a thing.

We will be forced to drop BIP32 before Q-Day, because existing wallet software treats BIP32 xpubs as safe to distribute. For example, hardware and multisig wallets surrender them frequently as part of normal UX paths. As discussed earlier, when a QA learns a user’s xpub, they can invert it and then derive all child secret keys. Deriving HBS keys with BIP32 would be counterproductive; a defensive wall built atop quicksand.

Instead we would need a new hierarchical-deterministic wallet standard which uses only cryptographic primitives known to be quantum-secure. Currently the only option I know of would be to hash the secret seed, which obviously cannot be shared publicly.

This could negatively affect various use cases in the Bitcoin ecosystem which frequently depend on the ability to independently derive public keys for wallets which a machine might not know the raw private keys for.

- Watch-only wallets

- Hardware wallets and other airgapped signing devices

- Multisignature wallets

However, this is neither a surprising nor insurmountable problem. Other post-quantum solutions share this issue, and further research will be needed to optimize UX or to build an alternative way to non-interactively derive child addresses from public data.

Conclusion

This deep dive into hash-based cryptography has been an incredible horizon-broadening experience for me. Previously, I saw post-quantum cryptography as a dark art, foreign to my understanding, but upon inspection I found the base premises to be elegant and simple. Hopefully this article has demonstrated that post-quantum cryptography need not be magic. We can use systems we already rely on today, in new and inventive ways, to (we hope) defeat future quantum adversaries.

I’m incredibly grateful to the numerous researchers who have poured thousands of hours into inventing, proving, attacking, and standardizing the HBS algorithms I’ve explored in this article. Check out some of the papers linked in this post, and you will see for yourself the creativity and ingenuity which has gone into their designs.

This post has been weeks in the making and yet still there are numerous ideas I have left out. For instance, I may have discovered an optimization to the Winternitz OTS scheme, which might further reduce its signature size, but to give myself enough time to investigate this properly demands I leave it for another day.

I have also neglected other sectors of post-quantum cryptography like Lattice cryptography, STARKS, and many more. The exciting promises they offer and the worrying trade-offs they demand require greater attention than I can afford them in this post. For further reading in this broad domain, check out the Wikipedia article on post-quantum cryptography.

More Resources

- https://bitcoinops.org/en/topics/quantum-resistance/

- https://delvingbitcoin.org/t/proposing-a-p2qrh-bip-towards-a-quantum-resistant-soft-fork/956/2

- https://bitcoin.stackexchange.com/a/93047

- https://gist.github.com/harding/bfd094ab488fd3932df59452e5ec753f

- https://lists.linuxfoundation.org/pipermail/bitcoin-dev/2018-February/015758.html

- https://bitcoinops.org/en/newsletters/2024/05/08/#consensus-enforced-lamport-signatures-on-top-of-ecdsa-signatures